Early last year Google changed some of the underlying technology used in their process of handling websites they suspect of being hacked (which leads to a “This site may be hacked” message being added to the websites’ listings in Google’s search results). More than a year later we are still finding that the review process for getting the “This site may be hacked” message removed after cleaning up such a website is in poor shape, and it is likely leading to a lot of confusion for people trying to navigate it if they don’t deal with its problems on a regular basis (like we do). While we think that Google warning about these situations is a good thing, the current state of the review process is not acceptable.

To give you an idea of what people are dealing with, let’s take a look at what we just dealt with while getting Google to clear a website we had cleaned up.

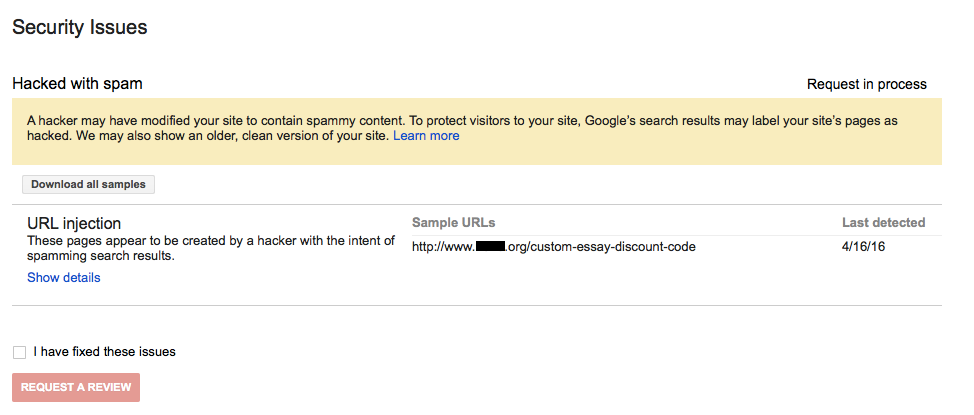

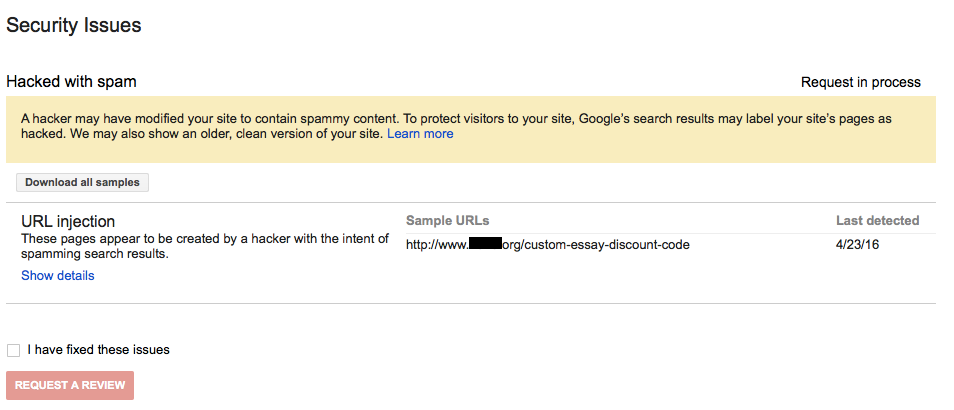

Once you have cleaned a website with the “This site may be hacked” message, you need to add the website to Google’s Search Console, and then you can request a review in its Security Issues section. That section will also give you information on what Google detected:

In this case Google detected that spam pages were being added to the website, which they refer to as a URL injection.

Before requesting a review last Monday, we double-checked that the spam pages no longer existed using the Fetch as Google tool in the Search Console, which allows you to see what is served when a page is requested by Google. The URL they listed on the Security Issues page was “Not found” when we used the tool, indicating that the spam page was no longer being served to Google.
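Fetch as Google runs on Google’s own infrastructure, so it can’t be reproduced exactly from your own machine. As a rough local cross-check, here is a minimal sketch (our own illustration, not how Google’s tool works) that requests a URL once with a Googlebot-style user agent and reports the raw status code *without* following redirects, so a redirect isn’t hidden behind the page it points to. The `check_url` name and the user-agent string are assumptions; a site that cloaks based on Google’s IP addresses rather than the user agent won’t be caught by this.

```python
import http.client
from urllib.parse import urlsplit

# Assumption: a commonly published Googlebot user-agent string. Malware that
# cloaks by IP address instead of user agent will NOT be detected this way.
GOOGLEBOT_UA = (
    "Mozilla/5.0 (compatible; Googlebot/2.1; "
    "+http://www.google.com/bot.html)"
)


def check_url(url, user_agent=GOOGLEBOT_UA, timeout=10):
    """Request `url` once, without following redirects.

    Returns (status_code, Location header or None), so a 301 that leads
    to a 404 page is reported as a 301 rather than as the final 404.
    """
    parts = urlsplit(url)
    conn_cls = (http.client.HTTPSConnection if parts.scheme == "https"
                else http.client.HTTPConnection)
    conn = conn_cls(parts.hostname, parts.port, timeout=timeout)
    # Rebuild the request target from the path and query string.
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    try:
        conn.request("GET", path, headers={"User-Agent": user_agent})
        resp = conn.getresponse()
        return resp.status, resp.getheader("Location")
    finally:
        conn.close()
```

For a cleaned-up spam URL you would hope to see `(404, None)` or `(410, None)`; a `(301, ...)` result means the server is still answering that URL with a redirect.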

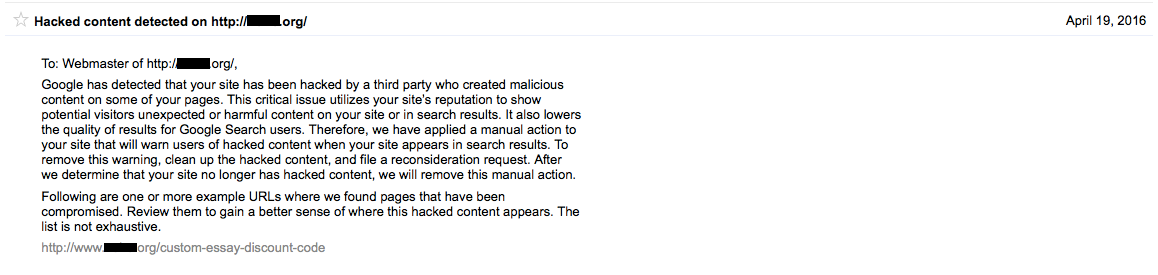

On Tuesday a message was left in Google’s Search Console for the non-www version of the website’s domain indicating that hacked content had been detected:

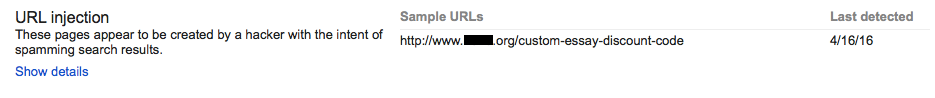

Since Google had already been listing the website as having a security issue for several days, you might think this message reflected a new detection, but it didn’t. The Security Issues section still listed the old last detected date:

Using the Fetch as Google tool in the Search Console, we requested the URL again, and it was still “Not found”:

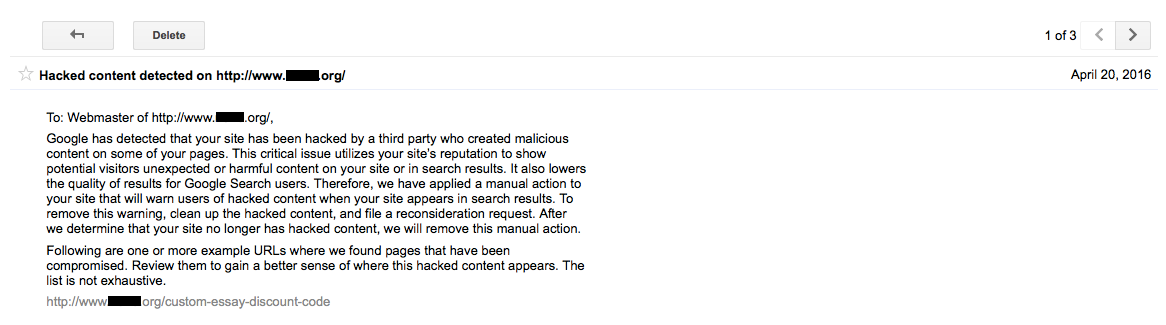

Then on Wednesday the same message was left for the www version of the domain:

Again the last detected date in the Security Issues section hadn’t been changed, and when we used the Fetch as Google tool the URL was still “Not found”:

Then on Saturday the Security Issues page indicated that URL injection had been detected as of that day:

We again used the Fetch as Google tool and it was still “Not found”:

At this point we also checked over the website to make sure the malicious code hadn’t returned, and it hadn’t.

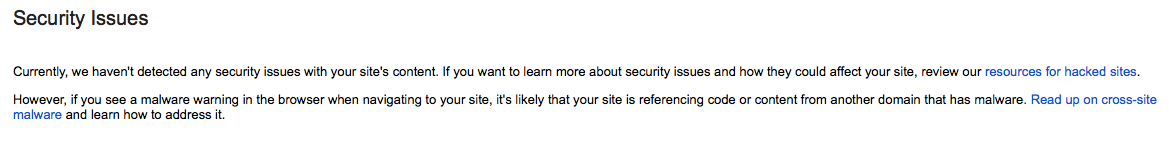

Then this morning the warning was gone from the search results and the Security Issues page was clear:

Considering that nothing changed between Saturday and today, the detection on Saturday would seem to be some kind of mistake. Seeing as the page wasn’t even being found, this doesn’t look like an understandable false positive but like something seriously wrong with their system. If you weren’t aware of how problematic the process is, you might have been very concerned upon seeing the new false detection.

The fact that it took them a week to finally clear the website also doesn’t seem acceptable in this case.

I am also getting a false positive for a URL injection.

The site in question was hacked 3 weeks ago and as a consequence was moved to new hosting and thoroughly cleaned up/restored from an older backup. It has had 3 separate scans from three different virus/malware scanners, and we’ve searched the server and database contents for all the backdoor keywords to check that the scans did the job. They did. Site is clean.

BUT… every day, the ‘Last Detected’ date on this one URL updates (bear in mind that 1000s of dodgy URLs were created on the site when it was hacked, so why would this one still work???). I have used Fetch and Render on the URL several times and it just 404s.

I have since gone through the access logs (a lot of hard work) and seen that it is a visit from Bingbot each day, which for some reason I cannot understand triggers a 301 that then redirects to the 404 page. As it’s only one link on Bing, I have requested its removal using Bing’s Webmaster Tools. Thank god it’s only one link; had there been loads, I don’t know how this would have been resolved.
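Digging through access logs by hand is the slow part. Here is a minimal sketch of how that search could be scripted, assuming the server writes the common Apache/Nginx “combined” log format (adjust the pattern if yours differs). It pulls every request for one path and counts the status codes per crawler, which is enough to spot a 301 served to Bingbot on a URL that otherwise 404s. The function names and crawler labels are our own illustration.

```python
import re
from collections import Counter

# Assumed "combined" log format:
# host ident user [time] "METHOD path PROTO" status size "referer" "user-agent"
COMBINED = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def hits_for_path(lines, path):
    """Yield (time, status, user_agent) for each logged request to `path`."""
    for line in lines:
        m = COMBINED.match(line)
        if m and m.group("path") == path:
            yield m.group("time"), int(m.group("status")), m.group("agent")


def summarize(lines, path):
    """Count (status, crawler) pairs for requests to `path`."""
    counts = Counter()
    for _time, status, agent in hits_for_path(lines, path):
        ua = agent.lower()
        if "bingbot" in ua:
            who = "Bingbot"
        elif "googlebot" in ua:
            who = "Googlebot"
        else:
            who = "other"
        counts[(status, who)] += 1
    return counts
```

Usage would be along the lines of `summarize(open("access.log"), "/spam-page")`. Keep in mind that user-agent strings can be spoofed; Google and Bing both document reverse DNS lookups as the way to confirm a request really came from their crawler.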

I’m not sure how Google registers these URL injections, but it isn’t really working correctly if it’s picking up 301 redirects to a 404. Perhaps Microsoft need to read this too. I hope they investigate this as it could be really problematic for businesses.